Self-Cannibalizing AI

The podcast Hard Fork had a great conversation about how AI will start to consume what it creates. This self-cannibalization will harm future models, and the way search engines are adopting AI will only make it worse.

AI is trained on internet content. The share of that content made by humans used to be, let's say, 99.9%. That's pretty pure. The latest GPT model, GPT-4, was trained on data up to 2021, so its training set is relatively pure.

As AI-generated content floods the internet, that ratio is going to shift. When AI models are trained on AI-generated content, it creates an entropic feedback loop where things only degrade.

If you've ever tapped the hell out of the predictive text above your phone keyboard, you've seen some form of this. It makes sense for a little while, but eventually it becomes a snake eating its tail, repeating the same phrase forever.
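To make the keyboard analogy concrete, here is a toy sketch in Python. It assumes a simple bigram model (count which word follows which) as a stand-in for a phone's predictive text; real keyboards and GPT-style models are far more sophisticated. The sample sentence and the function names are made up for illustration. Always accepting the top suggestion quickly locks the output into a loop:

```python
from collections import Counter, defaultdict

# Hypothetical sample text standing in for a user's typing history.
SAMPLE = "i think it is going to be a good day and i think it is going to rain"


def build_bigrams(text: str) -> dict[str, Counter]:
    """Count how often each word follows each other word."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def tap_top_suggestion(follows: dict[str, Counter], start: str, taps: int) -> list[str]:
    """Always accept the most common next word, like tapping the same suggestion.

    Ties go to the word seen first, which is how Counter.most_common orders them.
    """
    sentence = [start]
    for _ in range(taps):
        options = follows.get(sentence[-1])
        if not options:  # no known follower: the chain stops
            break
        sentence.append(options.most_common(1)[0][0])
    return sentence


if __name__ == "__main__":
    follows = build_bigrams(SAMPLE)
    print(" ".join(tap_top_suggestion(follows, "i", 22)))
```

Run as-is, it prints "i think it is going to be a good day and i think it is going to be a good day and i". The alternative ending "rain" never shows up, because each step only ever takes the single top suggestion. This is a rough illustration of the phone-keyboard loop, not a simulation of models being trained on AI output.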

AI in search engines

But the situation is even worse than the hosts described on the podcast.

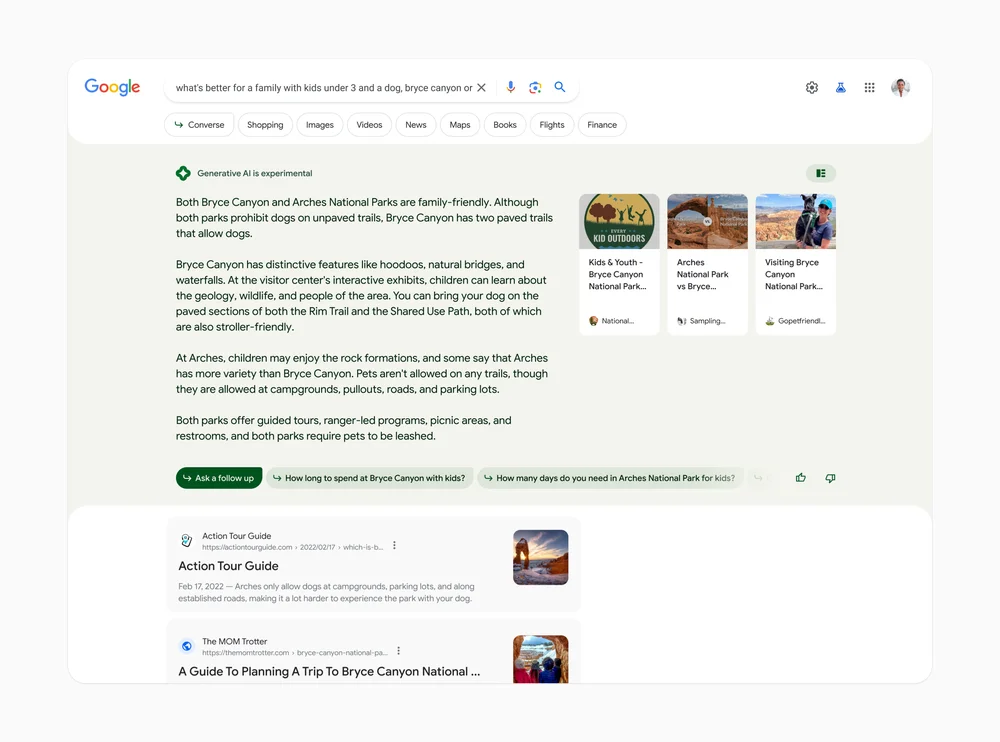

Both Google and Bing plan to put AI-generated answers at the top of their search results. This is an incredibly short-sighted move.

These AI-generated answers seem to synthesize the first few search results. This is super convenient for users: they don't need to click through to a website and get bombarded with ads and popups to get their answers.

The big problem for publishers

Those ads and popups are how the writers of this free content pay their rent. Either that, or they use the traffic to sell other services or products. Neither can happen if an AI distills their content and answers the user's question directly.

Over time, these sites will lose revenue, and writers will lose their jobs. Less human-made content will be created for future AI models to train on. Meanwhile, AI-generated spam will continue to flood the web.

This is made worse by the fact that many people already use search engines less, turning to ChatGPT for answers instead.

Content is reduced, reused, recycled

We're quickly approaching a point where there are fewer human writers and far too many AI writers. We'll be left with less creativity and more tainted data, and our shared body of knowledge will be worse for it.